The Honest AI Adoption Playbook: Lessons From a Company That Doesn’t Drink the Kool-Aid

If you have read our online reviews and customer testimonials, you will have noticed a theme: we value transparency and candid conversations, even if that means walking away from a project. That’s the kind of company we are. In this blog post, we share our honest take on AI adoption after working with various AI technologies on real-world implementations.

Step 1. Start with skepticism, not awe

We are living through the AI gold rush. It rewards noise, not nuance. Can you scroll through your LinkedIn feed for just thirty seconds and not see several posts about “revolutionizing workflows”, “7-day app development” and other shiny promises? In a bid to wear the “AI-enabled” badge and to combat the FOMO, companies – especially the product-centric ones – are skipping the messy middle: integrations, governance, sustainability and measurable value.

We have been AI janitors (to put it politely): clients have asked us to organize and clean up AI-generated code because they were unable to add a single new feature without creating chaos elsewhere.

We believe that real success with AI doesn’t come from shouting the loudest; it comes from listening carefully, experimenting cautiously, and being able to pull the plug when it doesn’t work.

Step 2. Build capabilities before building your models

Let’s be honest. When a company says “we built an AI model” it’s often a polite exaggeration. What they actually built is an interface to a model someone else trained. And this distinction is what differentiates the real from the gimmick.

Anyone can use models through APIs from OpenAI, Anthropic, Gemini, etc., and inject them into workflows, products, and services. But very few understand how to fine-tune a model, reduce hallucinations, create safe abstractions, achieve a model-agnostic architecture, and close the loop with human (non-AI) feedback.

These foundational capabilities are prerequisites for injecting AI into your products.
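To make "model-agnostic architecture" a little more concrete, here is a minimal sketch of the idea. Everything in it is an assumption made for illustration: the `TextModel` interface, the two stand-in providers and the `PROVIDERS` registry are hypothetical names, and a real adapter would wrap a vendor SDK behind the same `complete` method.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Protocol


class TextModel(Protocol):
    """Minimal interface the application depends on (hypothetical)."""

    def complete(self, prompt: str) -> str: ...


@dataclass
class EchoModel:
    """Stand-in provider for local testing; a real adapter would wrap a vendor SDK."""

    prefix: str = "echo"

    def complete(self, prompt: str) -> str:
        return f"{self.prefix}: {prompt}"


@dataclass
class UppercaseModel:
    """Second stand-in provider, to show that providers are swappable."""

    def complete(self, prompt: str) -> str:
        return prompt.upper()


# Registry of provider factories, keyed by a name that would come from configuration.
PROVIDERS: Dict[str, Callable[[], TextModel]] = {
    "echo": EchoModel,
    "upper": UppercaseModel,
}


def get_model(name: str) -> TextModel:
    """Look up a provider by name so application code never imports a vendor SDK."""
    try:
        return PROVIDERS[name]()
    except KeyError:
        raise ValueError(f"unknown provider: {name!r}") from None


def summarize(model: TextModel, text: str) -> str:
    """Application logic written against the interface, not a specific vendor."""
    return model.complete(f"Summarize: {text}")
```

Because `summarize` only knows about `TextModel`, switching vendors becomes a configuration change (`get_model("echo")` versus `get_model("upper")`) rather than a rewrite.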

Step 3. Depth over Demos

Most AI “proof-of-concepts” (including ours) exist to impress, not to endure. They tend to hide the ugly details – sometimes the Achilles’ heel – behind the scenes.

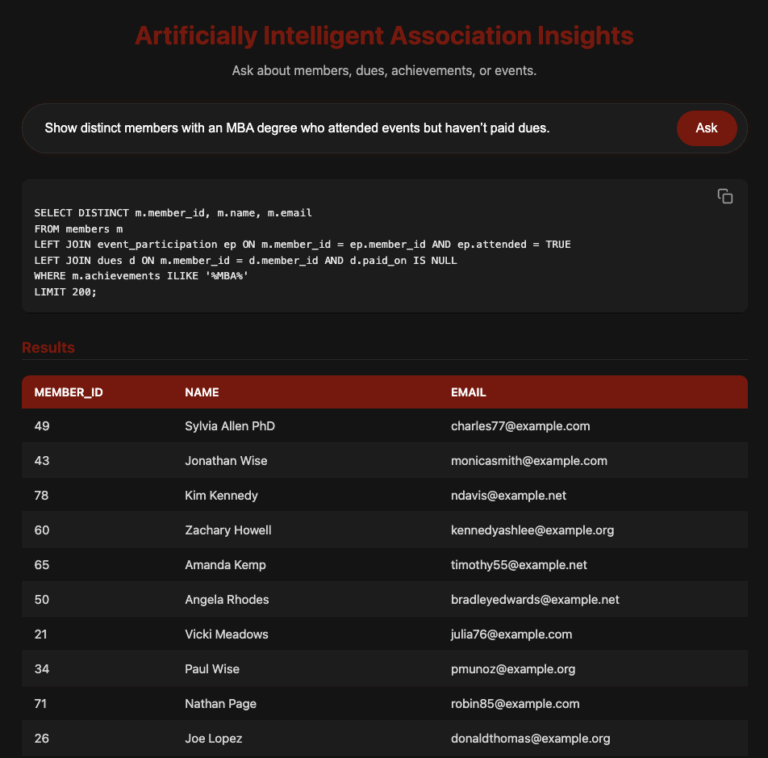

We built an AI Natural Language Query tool (entirely using OpenAI’s GPT-5 – told you, we are brutally honest) that lets users query an association’s database in plain English, like “How many members have an MBA but have not participated in our events?”. It works well and has generated a lot of interest.

The ugly truth of the tool (in its current form) is that the database schema (table names, fields, etc.) has to be passed to the model (GPT-5) in the prompt. Some companies might be fine with this; most won’t be, because it exposes internal structure to an external system (OpenAI).

This is where depth comes to the rescue. Detecting weaknesses, surfacing privacy concerns, and engineering abstraction layers (to hide sensitive data) all come from good software engineering training and discipline. If you can interleave systems design knowledge (depth) with AI productivity gains, you have the edge. Without depth, you just have an insecure, vendor-dependent product that won’t survive real-world scrutiny.
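One way such an abstraction layer could look is sketched below. It is not how our tool works today; it is an illustration under stated assumptions. The table and column names in `ALIASES` are invented, and the idea is simply that the model only ever sees curated aliases while the SQL it returns is translated back before it touches the database.

```python
import re
from typing import Dict

# Hypothetical mapping from internal names to curated, descriptive aliases.
ALIASES: Dict[str, str] = {
    "tbl_mbr_master": "members",
    "mbr_edu_cd": "degree",
    "tbl_evt_attnd": "event_attendance",
    "mbr_id": "member_id",
}


def _swap(text: str, mapping: Dict[str, str]) -> str:
    """Replace whole identifiers in a single pass so substitutions never cascade."""
    if not mapping:
        return text
    pattern = re.compile(r"\b(" + "|".join(re.escape(k) for k in mapping) + r")\b")
    return pattern.sub(lambda m: mapping[m.group(1)], text)


def mask(text: str, aliases: Dict[str, str] = ALIASES) -> str:
    """Internal names -> aliases, applied to anything placed in the prompt."""
    return _swap(text, aliases)


def unmask(sql: str, aliases: Dict[str, str] = ALIASES) -> str:
    """Aliases -> internal names, applied to SQL that comes back from the model."""
    return _swap(sql, {alias: real for real, alias in aliases.items()})


# The schema text that would go into the prompt contains aliases only.
prompt_schema = mask("tbl_mbr_master(mbr_id, mbr_edu_cd); tbl_evt_attnd(mbr_id)")

# SQL written by the model against the aliases is mapped back before execution.
real_sql = unmask("SELECT COUNT(*) FROM members WHERE degree = 'MBA'")
```

This hides the real names, not the shape of the schema, and the plain text substitution would also rewrite an alias that happens to appear inside a string literal. A production version would more likely work on a parsed query than on raw text.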

Step 4. Humans in the Loop

As an organization that has fully embraced AI, we can say with certainty that the benefits of adopting AI are immense. But like Uncle Ben said, “With great power comes great responsibility”.

There are companies out there that present a utopian angle on using AI tools: “Perform complex integrations with a click”, “Let AI build your product in days, not months”. These claims are exactly what they sound like: not true. Remember Builder.ai? They compared app development to ordering a pizza. It’s a cautionary tale now.

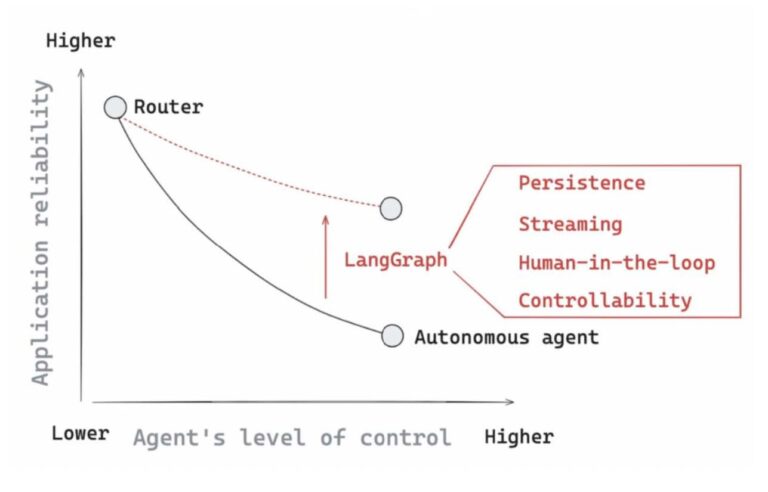

Frameworks like LangChain exist because of the reliability challenges arising from over-using AI agents. The accompanying graph from LangGraph Academy shows that the more autonomy you give to AI agents, the less predictable your system becomes.

Every AI solution should have a human checkpoint – whether it’s reviews, retraining, or validation. Feedback loops keep the system honest and prevent it from silently drifting into nonsense (hallucinations). The goal should not be to remove humans, but to empower them with AI tools.
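A human checkpoint can be as simple as a gate between the AI output and whatever consumes it. The sketch below is one possible shape, not a prescription: `Draft`, `ReviewGate`, the `confidence` score and the `0.9` threshold are all hypothetical, and how a system estimates confidence is left open.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class Draft:
    """An AI-produced result plus a confidence score (both hypothetical)."""

    content: str
    confidence: float  # 0.0 to 1.0, however the system chooses to estimate it


@dataclass
class ReviewGate:
    """Releases only confident drafts automatically; the rest wait for a person."""

    threshold: float = 0.9
    pending: List[Draft] = field(default_factory=list)
    feedback: List[Tuple[str, bool]] = field(default_factory=list)

    def submit(self, draft: Draft) -> bool:
        """Return True if released automatically, False if queued for review."""
        if draft.confidence >= self.threshold:
            return True
        self.pending.append(draft)
        return False

    def review(self, reviewer: Callable[[Draft], bool]) -> List[Draft]:
        """Apply a human decision to each queued draft and record the verdicts."""
        approved = []
        for draft in self.pending:
            ok = reviewer(draft)
            self.feedback.append((draft.content, ok))
            if ok:
                approved.append(draft)
        self.pending.clear()
        return approved
```

The `feedback` list is the part that closes the loop: the recorded verdicts are what you would later use for retraining, prompt changes, or adjusting the threshold.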

Step 5. Measuring what matters

Tracking vanity metrics like accuracy and precision often leads nowhere, and such metrics can be highly subjective. But simple measures like the following will give you a good idea of how you are doing on your AI adoption journey.

- Number of manual steps reduced

- Number of human-hours reduced from doing grunt work

- Number of new clients acquired through automation

- Number of critical errors detected through automated log monitoring

And remember, if your attempt to adopt AI into your product’s lifecycle didn’t go as planned, it’s not a bad thing. The lessons learned will make your future adoption efforts stronger and more informed.